Documentation

Detailed Guidance on Our Methods and Metrics

Context

The utilization of bibliometric indicators for research evaluation has long been restricted by limited data access for researchers and funding bodies. This limitation has prompted the search for alternative metrics, often leading to the exclusion of key indicators or the use of more accessible but less accurate substitutes. This situation has sometimes led to a misuse of metrics, as seen with the widespread but often criticized use of the Journal Impact Factor (JIF).

Mission

The ONE Research Community aims to revolutionize research assessment by providing all researchers with access to a broad spectrum of indicators and collaboration and benchmarking tools. These tools are designed to empower researchers to make informed decisions to advance their careers and to foster a deeper understanding of the available metrics.

Item & group oriented indicators

Our bibliometric indicators are categorized into two types: item-oriented and group-oriented. Item-oriented indicators are calculated for individual publications, such as citation counts or the nature of the publication (international, lead-authored, etc.). These reflect direct attributes of a publication.

In contrast, group-oriented indicators require aggregating data across an author's publications, such as total publication counts. These indicators provide a broader view of a researcher’s output and influence, and they require grouping publications according to specific criteria to ensure accurate assessment.

Item-oriented indicators

Among the item-oriented indicators, citation counts are particularly complex due to the varying citation practices across disciplines, document types, and publication ages. To fairly compare citation counts, we use a detailed method:

Number of citations

Citations are a fundamental measure of a publication’s influence. The number of citations a publication receives can vary significantly based on:

- Research field: Publications in fields like Chemistry might garner more citations compared to Mathematics, but fewer than those in High Energy Physics.

- Type of document: Reviews tend to accumulate more citations than research articles due to their comprehensive nature.

- Publication age: Older publications have had more time to accumulate citations.

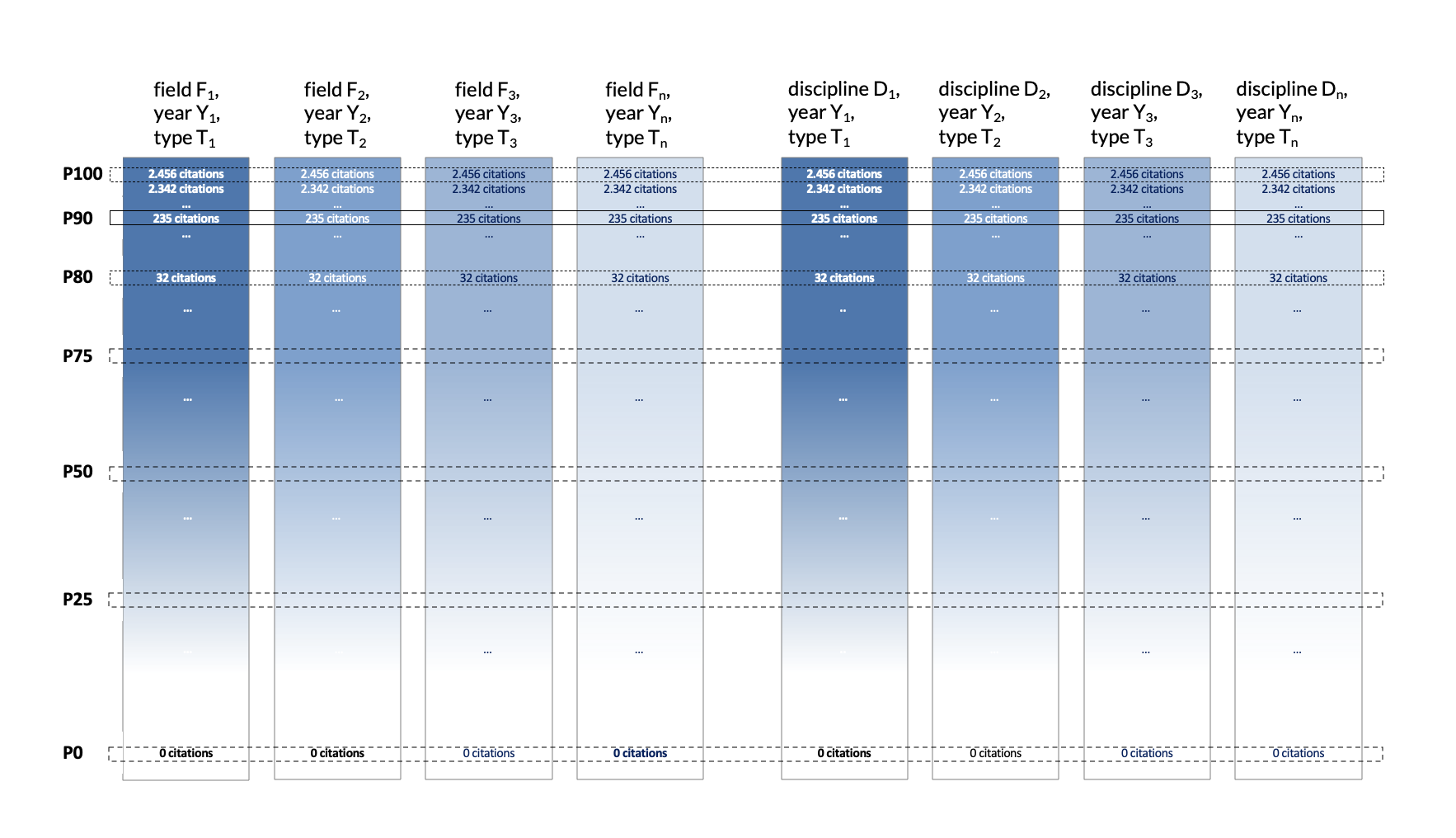

To account for these variables, we rank publications by their citation count within their respective categories (type, year, discipline) and calculate percentiles to assess their relative impact. This approach ensures fair comparisons by adjusting for field-specific and temporal factors.

Ranking Publications by Their Number of Citations

We create hundreds of rankings by grouping publications by discipline, document type, and publication year. Each publication is assigned a percentile within its cohort, allowing us to identify Highly Cited Papers (HCPs) and Outstanding Papers (OPs) based on their percentile rank:

- Highly Cited Papers (HCP): Publications ranking between the 90th and 99th percentiles.

- Outstanding Papers (OP): Publications at or above the 99th percentile.
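The cohort ranking described above can be sketched as follows. This is a minimal illustration, not the platform's implementation: the function name, the record fields (`id`, `discipline`, `doc_type`, `year`, `citations`), and the percentile convention (share of cohort members with strictly fewer citations) are all assumptions, since the text does not specify how ties or boundaries are handled.

```python
from collections import defaultdict

def classify_publications(publications):
    """Label each publication by its citation percentile within its cohort.

    A cohort is the set of publications sharing discipline, document type
    and publication year. The percentile is computed here as the share of
    cohort members with strictly fewer citations (an assumed convention).
    """
    cohorts = defaultdict(list)
    for pub in publications:
        key = (pub["discipline"], pub["doc_type"], pub["year"])
        cohorts[key].append(pub["citations"])

    labels = {}
    for pub in publications:
        key = (pub["discipline"], pub["doc_type"], pub["year"])
        counts = cohorts[key]
        below = sum(1 for c in counts if c < pub["citations"])
        percentile = 100.0 * below / len(counts)
        if percentile >= 99:
            label = "OP"      # at or above the 99th percentile
        elif percentile >= 90:
            label = "HCP"     # between the 90th and 99th percentiles
        else:
            label = "-"
        labels[pub["id"]] = (percentile, label)
    return labels

# Hypothetical cohort: 100 Chemistry articles from 2020 with 0..99 citations.
pubs = [
    {"id": f"p{i}", "discipline": "Chemistry", "doc_type": "article",
     "year": 2020, "citations": i}
    for i in range(100)
]
result = classify_publications(pubs)
print(result["p99"], result["p90"], result["p89"])
```

In this toy cohort only the most cited paper is labelled OP, the next nine are labelled HCP, and the rest receive no label.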

Expected Values: Reference Values

In bibliometrics, reference values help determine whether an indicator for a publication or author stands above or below average. These values are crucial due to the asymmetric distribution typical of bibliometric data:

- Mean (average): Often used as a reference, but it is strongly affected by the extreme values common in citation counts.

- 90th Percentile: Used internationally to define Highly Cited Papers and represents the top 10% of cited papers by field, publication year and document type.

- 99th Percentile: Used internationally to define what we call Outstanding Papers and represents the top 1% of cited papers by field, publication year and document type.

These reference values enable us to set benchmarks for what constitutes significant academic impact, aiding in the fair evaluation of research output.
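A small worked example may help show why the mean is a fragile reference value for skewed citation data while percentile thresholds are not. The citation counts below are invented for illustration, and the nearest-rank percentile method is an assumption; the text does not state which percentile definition is used.

```python
import math
from statistics import mean, median

def nearest_rank_percentile(values, p):
    """Return the p-th percentile of values using the nearest-rank method."""
    ordered = sorted(values)
    rank = max(1, math.ceil(p * len(ordered) / 100))
    return ordered[rank - 1]

# Hypothetical cohort: most papers are rarely cited, one is cited very often.
citations = [0, 1, 1, 2, 2, 3, 3, 4, 5, 500]

cohort_mean = mean(citations)        # pulled upward by the single outlier
cohort_median = median(citations)    # barely affected by the outlier
p90 = nearest_rank_percentile(citations, 90)
p99 = nearest_rank_percentile(citations, 99)
above_mean = sum(1 for c in citations if c > cohort_mean)

print(cohort_mean, cohort_median, p90, p99, above_mean)
```

Here the mean is 52.1 even though nine of the ten papers have five citations or fewer, so almost every paper looks "below average". Percentile thresholds depend only on rank order and are therefore not distorted by the outlier.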

Authors' indicators

Group-oriented indicators aggregate an author's bibliometric data to provide insights into their overall research output and influence. These indicators require the compilation of all publications attributed to an author, which involves complex identification processes:

Identifying the Actual Researchers

Correctly associating publications with authors is a challenge due to common issues like name synonyms and homonyms. With the introduction of systems like ORCID, which provide unique identifiers, and our own sophisticated algorithms, we have significantly improved the accuracy of author identification. Despite these advancements, minor discrepancies can occur, and these can often be rectified only by the authors themselves.

Counting Method

Each publication attributed to an author counts fully towards their total output. This total count method ensures that all recognized contributions are acknowledged equally, reflecting the collaborative nature of scientific work.

Main Field and Years of Scientific Activity

We assign authors to fields based on the most frequent classification of their publications, with allowances for secondary disciplines if they constitute a significant portion of an author's work. The years of scientific activity are calculated from the first to the last publication, providing a timeline of the author's active research period.
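The counting method, main-field assignment, and years of activity can be illustrated with a short sketch. Several details here are assumptions rather than documented behaviour: the `secondary_share` threshold of 25% stands in for "a significant portion", the years of activity are counted inclusively (first and last year both count), and the record fields `field` and `year` are hypothetical.

```python
from collections import Counter

def author_profile(publications, secondary_share=0.25):
    """Summarise an author's output from a list of publication records."""
    total = len(publications)  # full counting: each publication counts as 1
    field_counts = Counter(pub["field"] for pub in publications)
    # Most frequent field; ties resolve to the field encountered first.
    main_field = field_counts.most_common(1)[0][0]
    # Assumed rule: a secondary field needs at least `secondary_share` of output.
    secondary = sorted(
        field for field, n in field_counts.items()
        if field != main_field and n / total >= secondary_share
    )
    years = [pub["year"] for pub in publications]
    return {
        "total_publications": total,
        "main_field": main_field,
        "secondary_fields": secondary,
        # Assumed inclusive span from first to last publication year.
        "years_active": max(years) - min(years) + 1,
    }

# Hypothetical author with six publications.
pubs = [
    {"field": "Chemistry", "year": 2010},
    {"field": "Chemistry", "year": 2013},
    {"field": "Chemistry", "year": 2020},
    {"field": "Physics", "year": 2015},
    {"field": "Physics", "year": 2018},
    {"field": "Mathematics", "year": 2012},
]
profile = author_profile(pubs)
print(profile)
```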

Indicators & Dimensions

The indicators within the ONE Index are organized into distinct dimensions, each designed to capture a different facet of a researcher's academic and societal contributions. Within each dimension, indicators are assigned specific weights, so that the aspects most relevant to a given research area can be emphasized. This weighted system enables a more detailed and area-specific assessment of research impact.

List of indicators, updated as of December 2024

Invitation for Community Engagement

We acknowledge that the current system, while comprehensive, is not without its imperfections. In our pursuit of a fair and universally accepted assessment tool, we invite the research community to engage in ongoing dialogue and contribute to the refinement of the ONE Index.

This platform is meant to evolve through community input, reflecting a broad spectrum of academic activities and values. Our current framework is a humble contribution, intended as a foundation for a community-driven, equitable research assessment tool.

Comparing authors' indicators

The process of comparing authors within the ONE Index is designed to ensure a fair and contextually relevant assessment by considering similar cohorts of researchers. This methodology allows for meaningful comparisons by aligning researchers with their peers who share similar backgrounds and research trajectories.

Cohort Definition

Researchers are grouped into cohorts based on two factors:

- Research field: Classification follows the academic field and subfield taxonomy from OpenAlex.

- Years of Activity: Number of years since the first recorded publication, providing a proxy for career stage.

Scoring System

- 1) Rank per Indicator – For each indicator, all cohort members’ values are ordered from lowest to highest. Each researcher’s rank position in that list becomes their score for that indicator.

- 2) Dimension Score – Indicator ranks are averaged to produce a score for each dimension within the framework.

- 3) ONE Index – Dimension scores are averaged to produce the final ONE Index score.

- 4) Custom Weights – Weights can be applied at the indicator or dimension level to adapt the framework to the priorities of institutions, funders, or specific programs.
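The four steps above can be sketched in a few lines. This is an illustrative reading of the scoring system, not the production code: the function names, the indicator and dimension names, and the tie rule (rank = 1 + number of cohort members with a strictly lower value) are assumptions, and weights default to 1.0 when none are supplied.

```python
def rank_scores(values):
    """Step 1: rank cohort members from lowest (rank 1) to highest."""
    return {
        name: 1 + sum(1 for other in values.values() if other < value)
        for name, value in values.items()
    }

def weighted_mean(scores, weights=None):
    """Average scores; missing weights default to 1.0 (plain average)."""
    weights = weights or {}
    pairs = [(scores[key], weights.get(key, 1.0)) for key in scores]
    return sum(s * w for s, w in pairs) / sum(w for _, w in pairs)

def one_index(cohort, dimensions, indicator_weights=None, dimension_weights=None):
    """Steps 2-4: average ranks per dimension, then average the dimensions."""
    indicators = [ind for inds in dimensions.values() for ind in inds]
    ranks = {
        ind: rank_scores({r: vals[ind] for r, vals in cohort.items()})
        for ind in indicators
    }
    result = {}
    for researcher in cohort:
        dim_scores = {
            dim: weighted_mean(
                {ind: ranks[ind][researcher] for ind in inds}, indicator_weights
            )
            for dim, inds in dimensions.items()
        }
        result[researcher] = weighted_mean(dim_scores, dimension_weights)
    return result

# Hypothetical cohort of three researchers and two dimensions.
cohort = {
    "A": {"citations": 10, "hcp": 1, "patents": 0},
    "B": {"citations": 30, "hcp": 0, "patents": 2},
    "C": {"citations": 20, "hcp": 3, "patents": 1},
}
dimensions = {"performance": ["citations", "hcp"], "society": ["patents"]}

scores = one_index(cohort, dimensions)
weighted = one_index(cohort, dimensions, dimension_weights={"performance": 3.0})
print(scores, weighted)
```

With equal weights researcher B scores highest in this toy cohort; tripling the weight of the hypothetical `performance` dimension moves researcher C ahead, which shows how custom weights can change the ordering.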

Why This Matters

- Contextual fairness – Researchers are assessed only against truly comparable peers.

- Meaningful scores – A standalone value is meaningless unless compared to a relevant reference; cohort ranking provides that reference.

- Adaptability – Stakeholders can weight dimensions to reflect their strategic goals.

Dynamic Adjustment

- Adaptive Weights: Weights assigned to each indicator can be adjusted to reflect changes in disciplinary standards or the evolving nature of research fields, allowing the ONE Index to remain relevant and accurately reflective of current academic values.

- Flexible Cohort Windows: For cohort formation based on years of activity, younger researchers are grouped within narrow windows to ensure that comparisons are made among individuals at similar career stages. Conversely, for senior researchers with extensive years of activity, such as those over 25 years, the cohort windows are less restrictive. This flexibility recognizes that the impact of a few years more or less of activity diminishes as career length increases, allowing for fairer comparisons among more established researchers.
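One way to picture flexible cohort windows is a lookup table whose bins widen with career length. The boundaries below are purely hypothetical; the text only states that windows are narrow for early-career researchers and less restrictive beyond roughly 25 years of activity.

```python
# Hypothetical (low, high) bounds in years of activity; None means open-ended.
WINDOWS = [(0, 2), (3, 5), (6, 8), (9, 12), (13, 18), (19, 25), (26, None)]

def cohort_window(years_active):
    """Return the (low, high) window that contains the given career length."""
    for low, high in WINDOWS:
        if years_active >= low and (high is None or years_active <= high):
            return (low, high)
    raise ValueError("years_active must be a non-negative number of years")

print(cohort_window(4), cohort_window(15), cohort_window(30))
```

In this sketch a researcher with 4 years of activity is compared only with peers at 3 to 5 years, while everyone beyond 25 years shares a single open-ended cohort.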

Invitation for Community Engagement

At the ONE Research Community, we believe in the power of collective wisdom to create tools that are both fair and effective. We invite all stakeholders in the academic community—researchers, administrators, funders, and policymakers—to participate in an ongoing dialogue to refine and enhance the ONE Index. Your insights, critiques, and suggestions are invaluable to ensuring that our tool evolves in ways that are most beneficial and relevant to the diverse needs of the global research community.

Together, we can build an assessment system that not only measures but also meaningfully contributes to the advancement of knowledge and innovation. Join us in this collaborative effort to shape the future of research assessment.

Structure of the ONE Index

The ONE Index is a weighted measure that captures the breadth and depth of a researcher’s contributions across ten dimensions of academic and societal impact. Designed for clarity and precision, it empowers researchers, institutions, and decision-makers to assess and communicate research achievements effectively.

At the heart of the ONE Index are three supra-dimensions: Performance, Interaction within the Scientific Community, and Interaction with Society. Together, they encompass the diverse roles of researchers and the many ways their work influences the world.

- The Performance dimension reflects the quality, influence, and originality of a researcher’s work. Indicators such as normalized impact, highly cited publications, and sustained productivity paint a vivid picture of scholarly contributions. While still under development, the Innovation and Originality metrics will soon quantify a researcher’s ability to open new paths in science.

- The Interaction within the Scientific Community dimension showcases a researcher’s role within the academic ecosystem. Metrics like international collaborations, leadership roles, and contributions to community support (such as peer review and mentoring) capture their influence beyond individual research outputs.

- Lastly, the Interaction with Society dimension highlights a researcher’s ability to translate academic knowledge into real-world applications. Patents, industry collaborations, public outreach, and successful fundraising efforts demonstrate the broader societal and economic impact of their work.

Each of these dimensions includes specific, actionable indicators tailored for easy interpretation. By combining these into a single, weighted score, the ONE Index offers a comprehensive view of a researcher’s impact, both within academia and beyond.

*Currently in development.

Handouts & Quick Guides

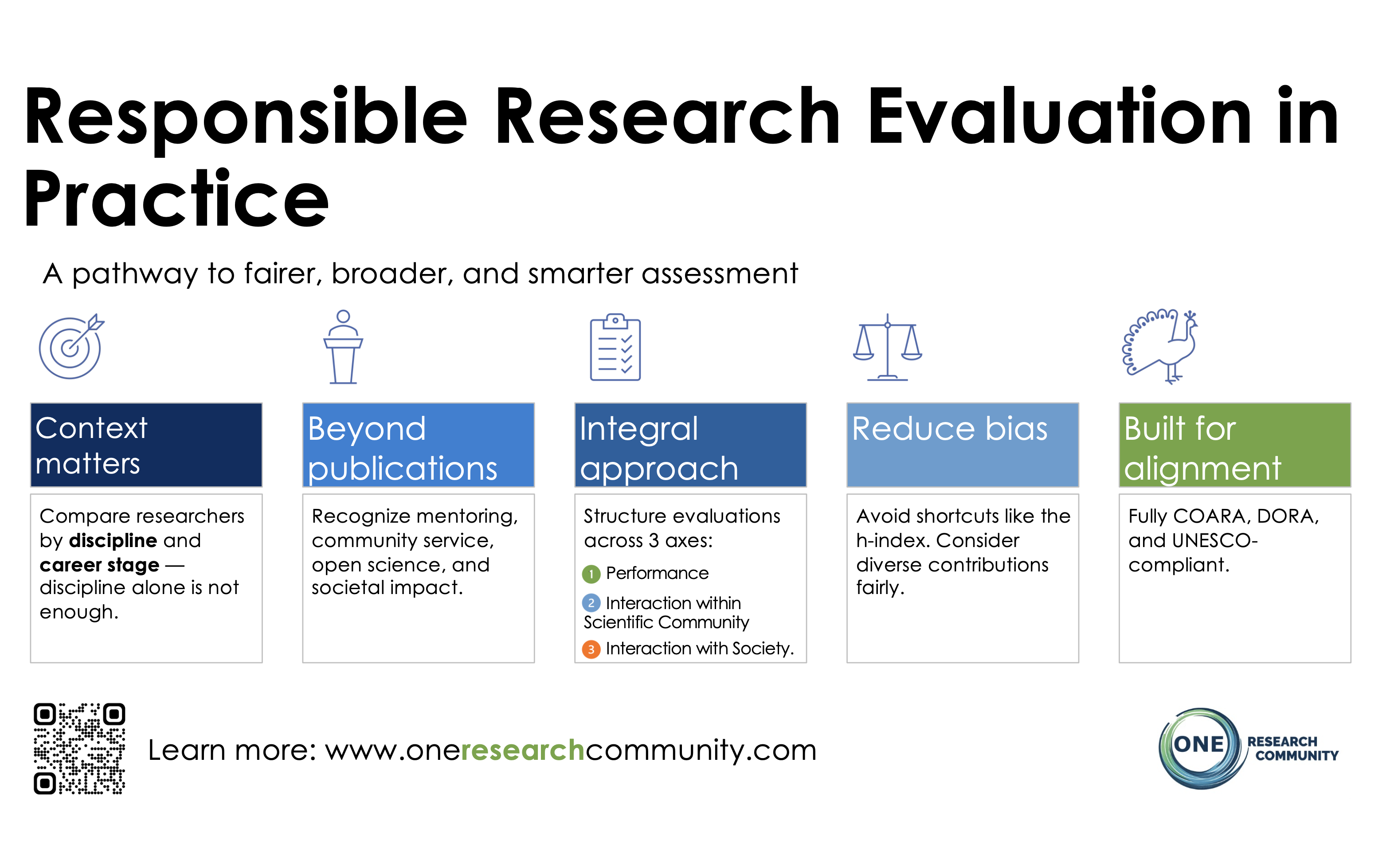

Fair, Inclusive & Smarter Evaluation

Discover how the ONE Framework redefines research assessment beyond outdated metrics.

ONE Framework

Get to Know Our Integral, Fair & Transparent Research Assessment: 3 Axes, 11 Dimensions & 70+ Indicators Behind the ONE Index

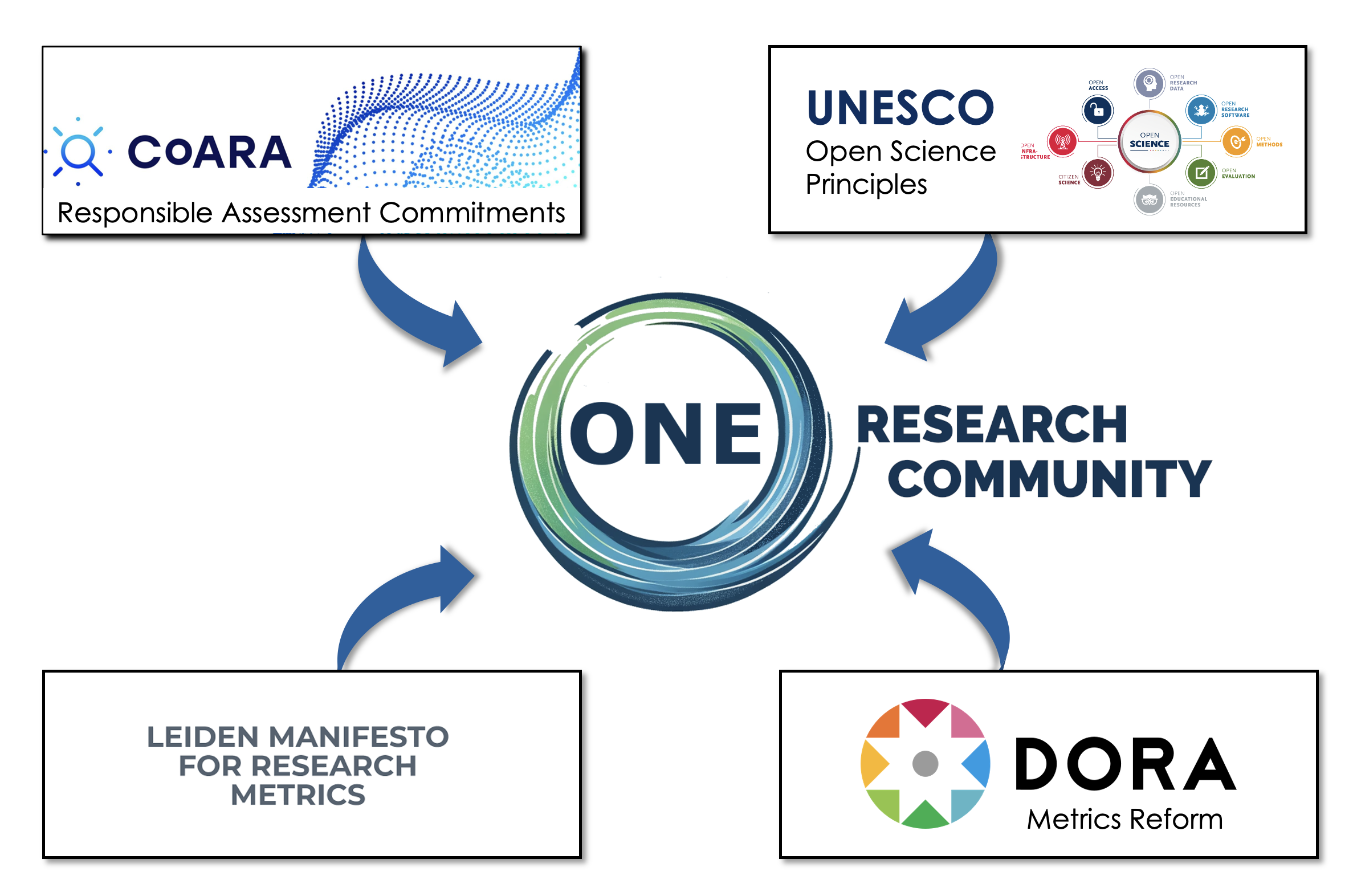

From Principles to Practice: ONE’s Global Alignment

ONE integrates the principles of COARA, UNESCO, DORA, and the Leiden Manifesto into a single operational framework.

Badges & Recognition

TL;DR

- Badges make profile information visible and interpretable.

- They reflect system status, declared roles, professional development, or public commitments.

- Badges are optional and not rankings or quality scores.

- Most badges are unlocked by completing profile sections or accepting pledges.

- Badges help others quickly understand who you are, what you do, and what you stand for.

Badges in ONE Research Community highlight profile status, declared roles, professional development, and public commitments. They are designed to make profile information more visible and interpretable, promote transparency and responsible research practices, and encourage meaningful profile completion.

Badges are optional and are not rankings or quality scores.

What badges are (and are not)

Badges are:

- Signals derived from declared information, system status, or public commitments.

- Visible markers that help others understand a researcher’s profile at a glance.

Badges are not:

- Performance evaluations.

- Quality rankings.

- Endorsements by ONE or third parties.

Badge categories

Badges are grouped into four categories, each with a distinct meaning:

- Profile status – System-recognised states related to profile identity and trust (e.g. claimed or peer-recognised profiles).

- Commitments & transparency – Voluntary public commitments and contextual information that support responsible and fair research assessment.

- Roles & contributions – Declared roles and activities such as peer review, mentoring, advising, or entrepreneurship.

- Development & profile completeness – Signals related to structured self-reflection and competence-based profile development.

Badge examples

Below are examples of badges available in ONE Research Community. Some badges may appear greyed out until the corresponding profile information or commitment is completed.

- Claimed Profile – System status

- Referred Profile – Peer recognition

- Validated Profile – Higher peer validation

- Trusted Contributor – Sustained contribution

- Integrity Pledge – Public commitment

- Zero Tolerance – Public commitment

- Inclusion Supporter – Community support

- Career Interruption Aware – Context & transparency

- Registered Reviewer – Declared role

- Declared Mentor – Declared role

- Declared Advisor – Declared role

- Entrepreneur – Declared activity

- Competence Profile – Structured self-assessment

Badge list

Profile status

- Claimed Profile – Profile has been claimed and confirmed by the researcher.

- Referred Profile – Profile has been recognised by peers within the community.

- Validated Profile – Profile has reached a higher level of peer validation.

- Trusted Contributor – Recognised for sustained positive contributions to the research community.

Commitments & transparency

- Integrity Pledge – Public commitment to responsible research practices and ethical conduct.

- Zero Tolerance – Commitment to zero tolerance for harassment, discrimination, and abuse in research environments.

- Inclusion Supporter – Reports activities supporting inclusion, mentoring, and career development of others.

- Career Interruption Aware – Provides contextual information on career interruptions to support fair evaluation.

Roles & contributions

- Registered Reviewer – Declares availability and eligibility to participate in peer review.

- Declared Mentor – Declares mentoring or supervision of early-career researchers.

- Declared Advisor – Declares advisory or senior guidance roles.

- Entrepreneur – Declares entrepreneurial or venture-related activity.

Development & profile completeness

- Competence Profile – Indicates completion of a structured competence self-assessment.

How badges are obtained

Badges are obtained through:

- Claiming and completing profile sections.

- Submitting specific forms (e.g. reviewer preferences, competences).

- Accepting public pledges.

- Reporting relevant professional activities.

Some badges reflect system status, while others reflect declared information provided by the researcher.

Data sources & transparency

Badge states are derived from structured profile data entered by the researcher, system events (such as profile claiming), and publicly accepted commitments. The underlying data remains accessible and editable by the profile owner.

Future evolution

Badges may evolve over time to incorporate additional context, peer confirmation, or activity-based signals. Any future verification layers will be clearly indicated and documented.

Badges reflect declared information, public commitments, or system status. They are not rankings or quality assessments.